Phase transitions may explain why SARS-CoV-2 spreads so fast and why new variants are spreading faster

J.C. Phillips, Marcelo A. Moret, Gilney F. Zebende, Carson C. Chow

Abstract

The novel coronavirus SARS CoV-2 responsible for the COVID-19 pandemic and SARS CoV-1 responsible for the SARS epidemic of 2002-2003 share an ancestor yet evolved to have much different transmissibility and global impact [1]. A previously developed thermodynamic model of protein conformations hypothesized that SARS CoV-2 is very close to a new thermodynamic critical point, which makes it highly infectious but also easily displaced by a spike-based vaccine because there is a tradeoff between transmissibility and robustness [2]. The model identified a small cluster of four key mutations of SARS CoV-2 that predicts much stronger viral attachment and viral spreading compared to SARS CoV-1. Here we apply the model to the SARS-CoV-2 variants Alpha (B.1.1.7), Beta (B.1.351), Gamma (P.1) and Delta (B.1.617.2) [3] and predict, using no free parameters, how the new mutations will not diminish the effectiveness of current spike-based vaccines and may even further enhance infectiousness by augmenting the binding ability of the virus.

https://www.sciencedirect.com/science/article/pii/S0378437122002576?dgcid=author

This paper is based on the ideas of physicist Jim Phillips (formerly of Bell Labs, a National Academy member, and a developer of the theory behind Gorilla Glass used in iPhones). It was only due to Jim’s dogged persistence and zeal that I’m even on this paper, although the persistence and zeal that ensnared me is the very thing that alienates most everyone else he tries to recruit to his cause.

Jim’s goal is to understand and characterize how a protein will fold and behave dynamically by utilizing an amino acid hydrophobicity (hydropathy) scale developed by Moret and Zebende. People have been developing hydropathy scores for many decades as a way to understand proteins, with the idea that hydrophobic amino acids (residues) will tend to be on the inside of proteins while hydrophilic residues will be on the outside where the water is. There are several existing scores, but Moret and Zebende, who are physicists and not chemists, took a different tack and measured how the solvent-accessible surface area (ASA) scales with the size of a protein fragment that has a specific residue at its center. The idea is that the smaller the ASA, the more hydrophobic the residue. As protein fragments get larger they tend to fold back on themselves and thus reduce the ASA. They looked at several thousand protein fragments and computed the average ASA with a given amino acid in the center. When they plotted the ASA against fragment length they found a power law, and each amino acid had its own exponent. The more negative the exponent, the faster the ASA shrinks as the fragment grows and thus the more hydrophobic the residue. The (negative) exponent could then be used as a hydropathy score. It differs from other scores in that it is not calculated in isolation from chemical properties alone but accounts for the protein background in which the amino acid sits.
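To make the power-law idea concrete, here is a minimal Python sketch of how an exponent could be read off as the slope of a straight-line fit in log-log space. It is not Moret and Zebende's actual procedure or data: the function name `fit_power_law_exponent`, the fragment lengths, and the synthetic ASA values (generated with a made-up exponent of -0.15) are all assumptions for illustration.

```python
import numpy as np

def fit_power_law_exponent(lengths, mean_asa):
    """Fit mean_asa ~ prefactor * lengths**exponent by least squares in log-log space.

    Returns (exponent, prefactor).
    """
    log_n = np.log(np.asarray(lengths, dtype=float))
    log_asa = np.log(np.asarray(mean_asa, dtype=float))
    # A power law is a straight line in log-log coordinates; its slope is the exponent.
    slope, intercept = np.polyfit(log_n, log_asa, 1)
    return slope, np.exp(intercept)

# Synthetic illustration only: these are NOT measured ASA values.
lengths = np.arange(3, 36, 2, dtype=float)   # hypothetical fragment lengths
synthetic_asa = 120.0 * lengths ** -0.15     # made-up power law to recover
exponent, prefactor = fit_power_law_exponent(lengths, synthetic_asa)
print(exponent, prefactor)
```

With real data one would repeat the fit once per amino acid, using the average ASA of fragments centered on that residue, and compare the resulting exponents.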

M and Z’s score blew Jim’s mind because power laws are indicative of critical phenomena and phase transitions. Jim computed the coarse-grained hydropathy score (averaged over a window of 35 residues) at each residue of a protein for a number of protein families. When COVID came along he naturally applied it to coronaviruses. He found that the coarse-grained hydropathy profiles of the SARS-CoV-1 and SARS-CoV-2 spike proteins each had several deep hydrophobic wells. The well depths were nearly equal, with those of SARS-CoV-2 being more nearly equal than those of SARS-CoV-1. He then hypothesized that there was a selection advantage for well-depth symmetry and that evolutionary pressure had pushed the SARS-CoV-2 spike to be near optimal. He argues that the symmetry allows the protein to coordinate activity better, much like the way oscillators synchronize more easily if their frequencies are more uniform. He predicted that, given this optimality, the spike was fragile and thus spike vaccines would be highly effective, and that spike mutations could not change the spike much without diminishing function.
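The coarse-graining step is essentially a moving average of per-residue scores. Here is a minimal Python sketch of that idea, not the code used for the paper. The function name `coarse_grain`, the two-letter `toy_scale`, and the choice to drop positions without a full window are assumptions. The real calculation uses the Moret-Zebende values for all twenty amino acids, which are not reproduced here.

```python
import numpy as np

def coarse_grain(sequence, scale, window=35):
    """Moving average of per-residue hydropathy scores.

    Only positions with a full window are returned, so the output has
    len(sequence) - window + 1 entries; entry i is centered on residue
    i + window // 2 (0-based). Edge handling is an assumption here.
    """
    scores = np.array([scale[aa] for aa in sequence], dtype=float)
    if window > len(scores):
        raise ValueError("window is longer than the sequence")
    kernel = np.ones(window) / window
    return np.convolve(scores, kernel, mode="valid")

# Toy two-letter scale with placeholder values (NOT the Moret-Zebende scale).
toy_scale = {"A": 1.0, "G": 0.0}
toy_sequence = "A" * 40 + "G" * 40
profile = coarse_grain(toy_sequence, toy_scale, window=35)
print(len(profile), profile[0], profile[-1])
```

In this toy example the profile slides from the all-A average down to the all-G average. On a real spike sequence the same smoothing is what turns a noisy per-residue score into the broad wells discussed here.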

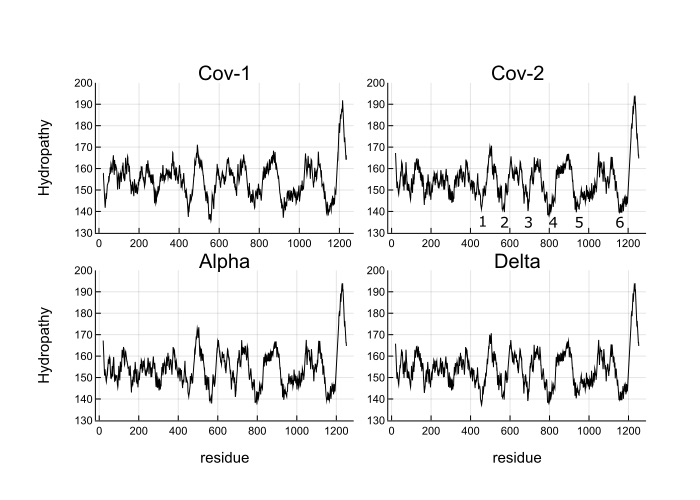

My contribution was to write some Julia code to automate this computation and apply it to some SARS-CoV-2 variants. I also scanned window sizes and found that the well depths are most equal close to Jim’s original value of 35. The plot shown here is Figure 3 from the paper.
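For readers who want a feel for what a window scan involves, here is a rough Python sketch (the real code was in Julia and is not reproduced here). Everything in it is an assumption: wells are taken to be strict local minima of the smoothed profile, "most equal" is measured as the standard deviation of the deepest `n_wells` minima, and the test signals are synthetic cosines rather than spike data.

```python
import numpy as np

def smooth(scores, window):
    """Moving average over full windows only."""
    kernel = np.ones(window) / window
    return np.convolve(np.asarray(scores, dtype=float), kernel, mode="valid")

def well_depths(profile, n_wells):
    """Values of the n_wells deepest strict local minima of the profile."""
    inner = profile[1:-1]
    is_min = (inner < profile[:-2]) & (inner < profile[2:])
    return np.sort(inner[is_min])[:n_wells]

def depth_spread(scores, window, n_wells):
    """Standard deviation of the well depths; inf if too few wells are found."""
    depths = well_depths(smooth(scores, window), n_wells)
    if len(depths) < n_wells:
        return float("inf")
    return float(np.std(depths))

def best_window(scores, windows, n_wells):
    """Window size whose wells are most nearly equal, plus all the spreads."""
    spreads = {w: depth_spread(scores, w, n_wells) for w in windows}
    return min(spreads, key=spreads.get), spreads

# Synthetic signals only: a periodic profile has equal wells, a tilted one does not.
positions = np.arange(600)
flat = np.cos(2 * np.pi * positions / 100)
tilted = flat + 0.001 * positions
flat_spread = depth_spread(flat, 35, n_wells=3)
tilted_spread = depth_spread(tilted, 35, n_wells=3)
best, spreads = best_window(tilted, range(15, 56, 10), n_wells=3)
print(flat_spread, tilted_spread, best)
```

A naive minimum finder like this will pick up small wiggles on real data, so a serious version would need some minimum depth or separation criterion for what counts as a well.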

What you see is the coarse-grained hydropathy score of the spike protein, which is a little under 1300 residues long. Between residues 400 and 1200 there are six hydropathic wells. The well depths are more similar for SARS-CoV-2 and its variants than for SARS-CoV-1. Omicron does not look much different from the wild type, which makes me think that Omicron’s increased infectiousness is probably due to mutations that affect viral growth and transmission rather than spike binding to ACE2 receptors.

Jim is encouraging (strong-arming) me into pushing this further, which I probably will, given that there are still so many unanswered questions as to how and why it works, if at all. If anyone is interested in this, please let me know.